The unexpected COVID-19 pandemic forced businesses to shift to remote work, which can bring inconveniences to business owners and regular staff alike. Accessing the work environment remotely may result in a slow system and lost productivity, especially when there are many connections and the system is not adapted for remote requests. The idea of permanent work from home is not appealing to everyone, but we have to face the fact that it’s our reality for the near future. In an attempt to optimize work from home, many organizations are looking for ways to make access to internal data easier. This is where distributed file systems (DFS) come in handy.

What is DFS?

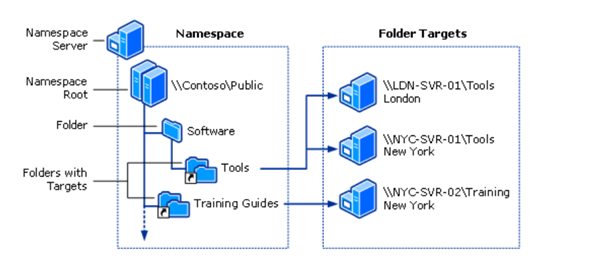

A Distributed File System is a combination of client-server services based on data redundancy (in other words, replicating the data across several sources to increase its availability) and on locating data through a namespace. The DFS Namespace lets you group files hosted on one or multiple servers and gives the user a single overview of the distributed file system. To access these files, folder targets are used. Each folder target contains a server-based path to the actual location of the files to be retrieved.
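To make the relationship between a namespace and its folder targets more concrete, here is a minimal sketch in Python. The namespace root, server names, and the `resolve` helper are all hypothetical and chosen for illustration; a real DFS deployment handles this lookup for you.

```python
# Hypothetical namespace: each logical folder maps to one or more
# folder targets (server-based paths holding the actual files).
NAMESPACE = {
    r"\\corp.example\shared\reports": [r"\\fs01\reports", r"\\fs02\reports"],
    r"\\corp.example\shared\designs": [r"\\fs03\designs"],
}


def resolve(logical_path):
    """Return every server-based path that can serve a logical path."""
    lowered = logical_path.lower()
    # Check the longest folder first so nested folders win over parents.
    for folder in sorted(NAMESPACE, key=len, reverse=True):
        if lowered == folder or lowered.startswith(folder + "\\"):
            remainder = logical_path[len(folder):]
            return [target + remainder for target in NAMESPACE[folder]]
    raise FileNotFoundError(logical_path)
```

The user only ever sees the logical path; which server actually delivers the file is decided behind the namespace.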

Image source: Microsoft

The main purpose of DFS is to allow users to access remote files and modify them in the same way as they would with files located on their local PC. Once a file is requested, a server delivers a copy of the file as a response, and this copy is cached on the client computer. Once the changes are made, this copy is sent back to the server so that the original file reflects them. While it may sound complicated, this solution is in fact designed to streamline the process of working on shared data while keeping it transparent.

Distributed file systems call for increased security measures. One way is to restrict file access based on user roles in Active Directory. This helps to protect sensitive data and to apply the least privilege model. It is also necessary to make the system available only within the internal network through a VPN and to use data encryption.

DFS can be implemented locally or using cloud-based services. The success of a local implementation depends on the skills of your IT staff. While both approaches can bring great results, DFS file sharing solutions are especially recommended for organizing large volumes of data. Even though cloud services are costly, their management can be delegated to the vendor. That’s why you need to decide which investment is more reasonable for your company.
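The request, cache, and write-back cycle described in this section can be sketched in a few lines of Python. This is a simplified illustration, not how any particular DFS product is implemented; the `FileServer` and `Client` classes are hypothetical, and locking and conflict handling are left out.

```python
class FileServer:
    """Holds the original files (an in-memory stand-in for a real server)."""

    def __init__(self):
        self.files = {}

    def read(self, path):
        return self.files[path]

    def write(self, path, data):
        self.files[path] = data


class Client:
    """Works on cached copies and sends them back on save."""

    def __init__(self, server):
        self.server = server
        self.cache = {}

    def open(self, path):
        # The first request fetches a copy; later ones reuse the cache.
        if path not in self.cache:
            self.cache[path] = self.server.read(path)
        return self.cache[path]

    def edit(self, path, new_text):
        self.open(path)  # make sure a cached copy exists
        self.cache[path] = new_text  # the change stays local for now

    def save(self, path):
        # Send the cached copy back so the original reflects the change.
        self.server.write(path, self.cache[path])
```

Until `save` is called, the server still holds the old version, which is why real systems add locking or versioning on top of this basic cycle.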

How your organization can benefit from DFS

Uptime and ensuring data integrity

Data redundancy ensures high availability: if one SMB server fails or goes down, the system remains online and the data stays fully accessible.
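As a rough illustration of why redundancy keeps data available, the sketch below reads a file from the first replica that is online. The replica records and the `read_with_failover` helper are hypothetical; a real DFS client performs this failover transparently.

```python
class AllReplicasDown(Exception):
    """Raised when no replica holding the file is reachable."""


def read_with_failover(replicas, path):
    """Return (server name, file content) from the first online replica."""
    for replica in replicas:
        if not replica["online"]:
            continue  # skip the failed server and try the next copy
        if path in replica["files"]:
            return replica["name"], replica["files"][path]
    raise AllReplicasDown(path)
```

With two replicas holding the same file, taking one offline changes which server answers, but not whether the request succeeds.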

Easy remote access

When users log into their workspace, their remote file directories are accessed in the same manner as local ones. Also, if the data is stored on multiple servers in different locations, it’s possible to create rules that deliver these files from the closest server to speed up request processing.
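A "closest server" rule can be pictured as sorting the available servers by how far they are from the user. The sketch below assumes a hypothetical table of link costs between sites; the site names, costs, and the `order_targets` helper are made up for illustration.

```python
# Hypothetical site-to-site link costs; a lower number means closer.
LINK_COST = {
    ("berlin", "berlin"): 0,
    ("berlin", "paris"): 10,
    ("berlin", "new-york"): 80,
}


def order_targets(client_site, targets):
    """Sort (server, site) pairs so the closest server comes first."""

    def cost(target):
        _server, site = target
        # Sites with no known link cost are treated as the farthest.
        return LINK_COST.get((client_site, site), float("inf"))

    return sorted(targets, key=cost)
```

A client in Berlin would then try the Berlin server first and fall back to more distant sites only when needed.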

A vast range of applications

DFS has many practical applications and serves as the basis for many industry standards, such as the Network File System (NFS) protocol for sharing files in the same network; the Hadoop Distributed File System, an open-source solution used for large data sets; and the Server Message Block (SMB) protocol, responsible for file management and sharing.

DFS is only one of the steps towards an effective remote workspace environment, yet it is one of the most powerful. As for how DFS will evolve, trend experts predict that these systems will become simpler to manage and more autonomous, eliminating potential risks in the course of running operations.

About the author: Katie Greene is a freelance writer from the US with a passion for technology, creative writing and personal development.